WSJ Reading and Analysis -1st

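This article was last updated on 2025-11-10. The knowledge is fresh while the ink is still drying, but over time parts of it may need revising. Thank you for your understanding.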

Why ‘Distillation’ Has Become the Scariest Word for AI Companies

Original Text

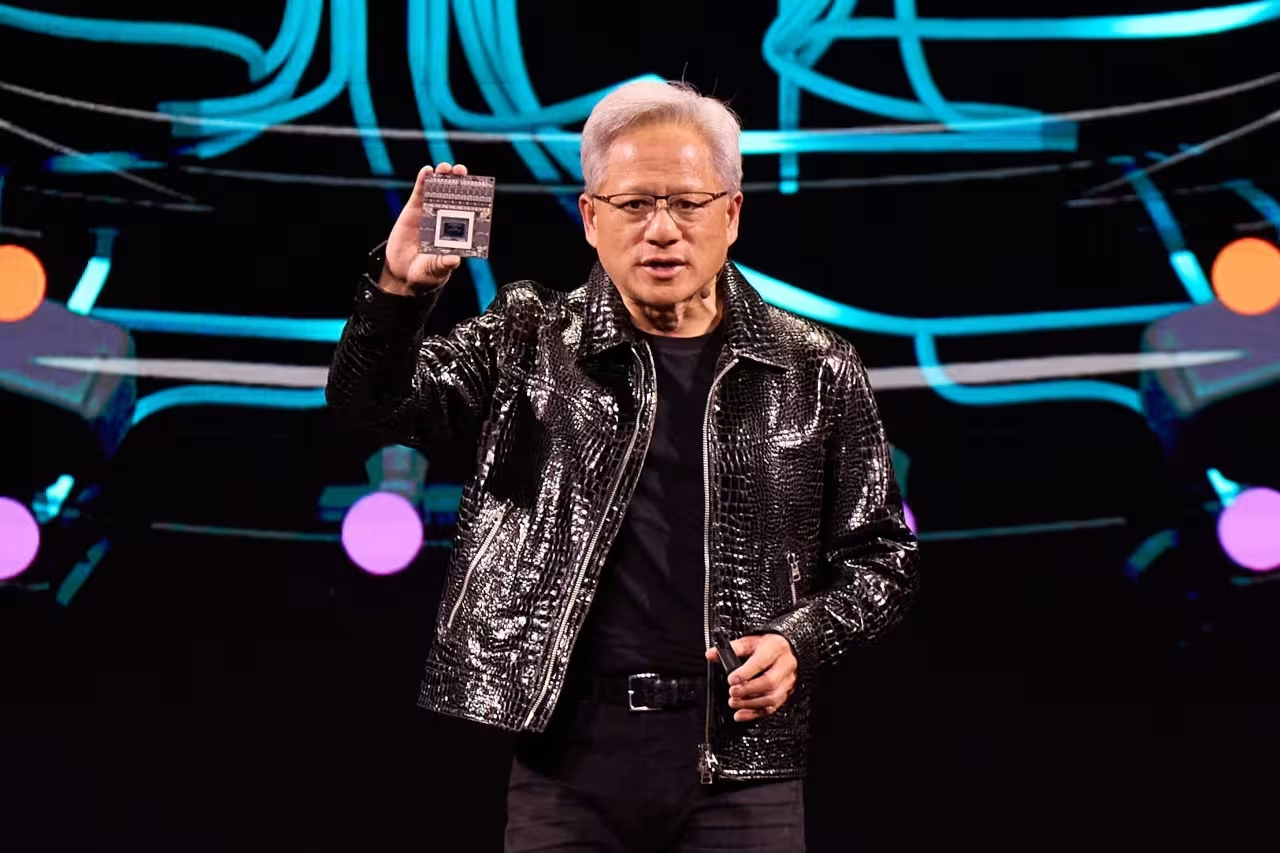

DeepSeek’s success with distillation is raising new doubts about the business models of tech giants and startups spending billions to develop the most advanced AI. Photo: Lam Yik/Bloomberg News

Tech giants have spent billions of dollars on the premise that bigger is better in artificial intelligence. DeepSeek’s breakthrough shows smaller can be just as good.

The Chinese company’s leap into the top ranks of AI makers has sparked heated discussions in Silicon Valley around a process DeepSeek used known as distillation, in which a new system learns from an existing one by asking it hundreds of thousands of questions and analyzing the answers.

“It’s sort of like if you got a couple of hours to interview Einstein and you walk out being almost as knowledgeable as him in physics,” said Ali Ghodsi, chief executive officer of data management company Databricks.

The leading AIs from companies like OpenAI and Anthropic essentially teach themselves from the ground up with huge amounts of raw data—a process that typically takes many months and tens of millions of dollars or more. By drawing on the results of such work, distillation can create a model that is almost as good in a matter of weeks or even days, for substantially less money.

OpenAI said Wednesday that it has seen indications DeepSeek distilled from the AI models that power ChatGPT to build its systems. OpenAI’s terms of service forbid using its AI to develop rival products.

DeepSeek didn’t respond to emails seeking comment.

Distillation isn’t a new idea, but DeepSeek’s success with it is raising new doubts about the business models of tech giants and startups spending billions to develop the most advanced AI, including Google, OpenAI, Anthropic and Elon Musk’s xAI. Just last week, OpenAI announced a partnership with SoftBank and others to invest $500 billion in AI infrastructure over the next five years.

If those investments don’t provide companies with an unbeatable advantage but instead serve as springboards for cheaper rivals, they might become difficult to justify. In the wake of DeepSeek, executives and investors in Silicon Valley are re-examining their business models and questioning whether it still pays to be an industry leader.

“Is it economically fruitful to be on the cutting edge if it costs eight times as much as the fast follower?” said Mike Volpi, a veteran tech executive and venture capitalist who is general partner at Hanabi Capital.

OpenAI CEO Sam Altman on X called DeepSeek’s latest release “an impressive model, particularly around what they’re able to deliver for the price,” and added, “we are excited to continue to execute on our research roadmap.” Anthropic CEO Dario Amodei wrote on his blog that DeepSeek’s flagship model “is not a unique breakthrough or something that fundamentally changes the economics” of advanced AI systems, but rather “an expected point on an ongoing cost reduction curve.”

Tech executives expect to see more high-quality AI applications made with distillation soon. Researchers at AI company Hugging Face began trying to build a model similar to DeepSeek’s last week. “The easiest thing to replicate is the distillation process,” said senior research scientist Lewis Tunstall.

AI models from OpenAI and Google remain ahead of DeepSeek on the most widely used rankings in Silicon Valley. Tech giants are likely to maintain an edge in the most advanced systems because they do the most original research. But many consumers and businesses are happy to use technology that’s a little worse but costs a lot less.

President Trump’s AI czar, David Sacks, said on Fox News on Tuesday that he expects American companies to make it harder to use their models for distillation.

DeepSeek has said it used distillation on open-source AIs released by Meta Platforms and Alibaba in the past, as well as from one of its models to build another. Open-source AI developers typically allow distillation if they are given credit. DeepSeek’s own models are open-source.

NovaSky, a research lab at University of California, Berkeley, this month released technology it said was on par with a recent model released by OpenAI. The NovaSky scientists built it for $450 by distilling an open-source model from Chinese company Alibaba.

The Berkeley researchers released the model as open-source software, and it is already being used to help build more cheap AI technology. One startup, Bespoke Labs, used it to distill DeepSeek’s technology into a new model it said performed well on coding and math problems.

“Distillation as a technique is very effective to add new capabilities to an existing model,” said Ion Stoica, a professor of computer science at UC Berkeley.

Competition in the AI industry is already fierce, and most companies are losing money as they battle for market share. The entry of DeepSeek and others that use distillation could drive prices down further, creating a feedback loop in which it is harder and harder to justify spending huge sums on advanced research.

Prices for software developers accessing AI models from OpenAI and others have fallen dramatically in the past year. Open-source AI such as DeepSeek’s only promises to lower costs further, according to tech executives.

“It will be harder to justify very large margins for this level of intelligence,” said Vipul Ved Prakash, CEO of Together AI, which sells computational services to developers of AI applications.

Write to Miles Kruppa and Deepa Seetharaman.

Analysis

Definition and Advantages of Distillation Technology

The article explains that distillation is a process in which a new system learns from an existing one by asking it hundreds of thousands of questions and analyzing the answers. This gives it large time and cost advantages over conventional training. Leading models from companies like OpenAI and Anthropic are trained from the ground up on huge amounts of raw data, which typically takes many months and tens of millions of dollars or more. Distillation builds on the results of that work, so it can produce a model that is almost as good in weeks or even days, for substantially less money.
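The article describes distillation only at a high level. As a rough illustration, the toy sketch below shows one classic form, soft-label knowledge distillation: a student model never sees raw training data and learns only from a teacher's answers to many queries. Everything in it is hypothetical (the teacher weights `TEACHER_W`, two-feature inputs, the temperature, the learning rate), and it is not how DeepSeek or any AI lab actually distills language models. Soft labels also assume access to the teacher's output probabilities; distilling from a chat product usually means training on its generated text instead.

```python
import math
import random

# Hypothetical teacher: a fixed linear classifier over 3 classes and 2 input features.
# We treat it as a black box that can only be queried for its output probabilities.
TEACHER_W = [[2.0, -1.0], [-1.5, 2.5], [0.5, 0.5]]


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; a higher temperature gives softer targets."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def linear_logits(weights, x):
    """One logit per class: dot product of that class's weight row with input x."""
    return [w[0] * x[0] + w[1] * x[1] for w in weights]


def argmax(values):
    return max(range(len(values)), key=values.__getitem__)


def distill(num_queries=5000, epochs=20, lr=0.1, temperature=2.0, seed=0):
    rng = random.Random(seed)
    # Step 1: "ask" the teacher many questions and record its soft answers.
    queries = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(num_queries)]
    targets = [softmax(linear_logits(TEACHER_W, x), temperature) for x in queries]

    # Step 2: train a student from scratch using only those question/answer pairs,
    # minimizing cross-entropy between teacher (p) and student (q) distributions.
    student = [[0.0, 0.0] for _ in TEACHER_W]
    for _ in range(epochs):
        for x, p in zip(queries, targets):
            q = softmax(linear_logits(student, x), temperature)
            for k, w in enumerate(student):
                # d(cross-entropy(p, q)) / d(logit_k) = (q_k - p_k) / T
                g = (q[k] - p[k]) / temperature
                w[0] -= lr * g * x[0]
                w[1] -= lr * g * x[1]
    return student


def agreement(student, num_tests=2000, seed=1):
    """Fraction of fresh inputs on which student and teacher pick the same class."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(num_tests):
        x = (rng.uniform(-1, 1), rng.uniform(-1, 1))
        if argmax(linear_logits(student, x)) == argmax(linear_logits(TEACHER_W, x)):
            hits += 1
    return hits / num_tests


if __name__ == "__main__":
    student = distill()
    print(f"student/teacher agreement: {agreement(student):.3f}")
```

Here the student shares the teacher's linear form, so it can reproduce the teacher's decisions almost exactly. In practice the student is usually smaller or structured differently and only approximates the teacher. The temperature softens the teacher's probabilities so the student also learns how the teacher ranks the wrong answers, not just its top choice.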

DeepSeek's Success and Its Impact

DeepSeek's leap into the top ranks of AI makers, and the distillation process it used, have set off heated discussion in Silicon Valley about prevailing AI investment strategies. The article argues that DeepSeek's breakthrough challenges the "bigger is better" premise behind billions of dollars in spending by showing that smaller models can be just as good. That raises new doubts about the business models of tech giants and startups spending heavily to build the most advanced AI, including Google, OpenAI, Anthropic and Elon Musk's xAI.

Industry Response and Challenges

In response, executives and investors in Silicon Valley are re-examining their business models and questioning whether it still pays to be an industry leader. The article's reasoning is that if massive investments do not give companies an unbeatable advantage but instead serve as springboards for cheaper rivals, they may become difficult to justify. Venture capitalist Mike Volpi put the question bluntly, asking whether it is economically fruitful to be on the cutting edge if it costs eight times as much as the fast follower. Databricks CEO Ali Ghodsi's analogy explains why fast followers can catch up: distillation is like interviewing Einstein for a couple of hours and walking out almost as knowledgeable about physics.

Comparison of Major Companies and Technologies

Despite DeepSeek's progress, the article notes that models from OpenAI and Google remain ahead of DeepSeek on the most widely used rankings in Silicon Valley, and that tech giants are likely to keep an edge in the most advanced systems because they do the most original research. However, many consumers and businesses are happy to use technology that is slightly worse but costs much less, which leaves room for cheaper, distilled models to win wide adoption.

Policy and Ethical Considerations

As DeepSeek and similar companies rise, distillation is drawing attention from both AI companies and policymakers. OpenAI said it has seen indications that DeepSeek distilled from the models that power ChatGPT, although OpenAI's terms of service forbid using its AI to develop rival products. Open-source developers, by contrast, typically allow distillation as long as they are credited. President Trump's AI czar, David Sacks, said he expects American companies to make it harder to use their models for distillation. This suggests that model providers, and possibly regulators, may move to protect the interests and technological advantages of the companies that build frontier models.

Future Outlook and Market Competition

According to the article, tech executives expect distillation to produce more high-quality, low-cost AI applications soon. Cheaper entrants could intensify competition and push prices down further, creating a feedback loop that makes it harder and harder to justify spending huge sums on advanced research. Open-source models are likely to reinforce this trend. Examples include DeepSeek's own models and the model from UC Berkeley's NovaSky lab, which was built for $450 by distilling an Alibaba model. Such models should further lower costs and make capable AI more widely accessible.